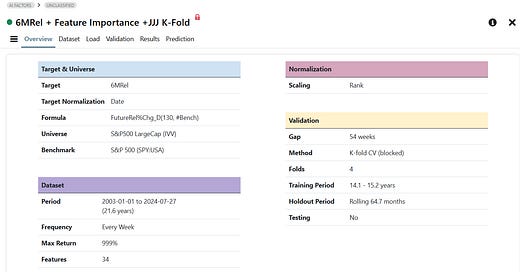

Let us say we have the following variables for an AI Factor Model (built on portfolio123.com).

Now what will happen in a K-fold CV Test?

The AI model can build the portfolio over 21.6 years (in orange: Validation Holdout).

For each holdout period, it builds the portfolio from the predictions of a model trained on the validation training periods (in green: Validation - Training).

Plus, we cross validate!

Here is what ChatGPT says about cross-validation with the ExtraTrees ML model:

“Why is K-Fold Cross-Validation Effective for Preventing Overfitting?

K-fold cross-validation is a method to prevent overfitting in machine learning models, including Extra Trees (Extremely Randomized Trees). Here's why:

1. More Reliable Performance Estimates

Instead of relying on a single train-test split, k-fold cross-validation splits the dataset into multiple train-test splits.

This ensures that the model is evaluated on different subsets of data, providing a more stable and unbiased estimate of performance.

2. Reduces Overfitting on a Single Training Set

If you train Extra Trees on just one train-test split, the model may overfit to that particular training data.

K-fold forces the model to train on different subsets and validate on different holdout sets, preventing it from memorizing patterns from a single training set.

3. Ensures Model Generalization

Overfitting occurs when a model performs well on training data but poorly on unseen data.

K-fold cross-validation tests the model on unseen data multiple times, ensuring that it generalizes well across different portions of the dataset.

4. Better Utilization of Data

With a standard train-test split, a portion of the data is never used for training.

In k-fold CV, every data point is used for both training and validation at some point, maximizing data efficiency.

5. Extra Trees & Overfitting Control

Extra Trees (Extremely Randomized Trees) are less prone to overfitting due to random feature splits, but they can still overfit if trained on small or unbalanced datasets.

K-fold CV ensures that every part of the data contributes to both training and validation, reducing the risk of the model learning noise instead of general patterns.

Example: How K-Fold Works in Extra Trees

The dataset is split into k folds (e.g., k=4).

The model is trained on k-1 folds and tested on the remaining fold.

This process repeats k times, each time using a different fold as the test set.

The final performance metric is the average across all k iterations.

Summary: Why K-Fold Cross-Validation Prevents Overfitting

More robust performance estimates

Reduces model reliance on a specific train-test split

Ensures model generalization across different subsets

Prevents the model from memorizing data patterns

Works well with tree-based models like Extra Trees”
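The k-fold procedure described in the quote can be sketched in a few lines of plain Python. This is only an illustration under my own assumptions: the function names `k_fold_indices` and `cross_validate_mean_model` are made up, the folds are contiguous blocks, and a "predict the training mean" toy model stands in for Extra Trees. In practice one would more likely use scikit-learn's `KFold` together with `ExtraTreesRegressor`.

```python
from statistics import mean


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds.

    Returns a list of (train_indices, test_indices) pairs, one per fold.
    """
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size

    splits = []
    for i in range(k):
        test = folds[i]
        # Train on every fold except the one held out.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


def cross_validate_mean_model(y, k):
    """Toy stand-in for a real model: predict the mean of the training targets.

    Returns the mean squared error averaged over the k holdout folds.
    """
    scores = []
    for train, test in k_fold_indices(len(y), k):
        prediction = mean(y[i] for i in train)
        scores.append(mean((y[i] - prediction) ** 2 for i in test))
    return mean(scores)


splits = k_fold_indices(8, 4)
for train, test in splits:
    print("train:", train, "holdout:", test)
```

Each sample lands in exactly one holdout fold, so stitching the k holdout predictions together covers the whole dataset once.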

Because K-fold CV (validation and training) produces holdout predictions for every period, we can also use AIFactorValidation in a Portfolio Strategy to test the whole time span for which we loaded the data.

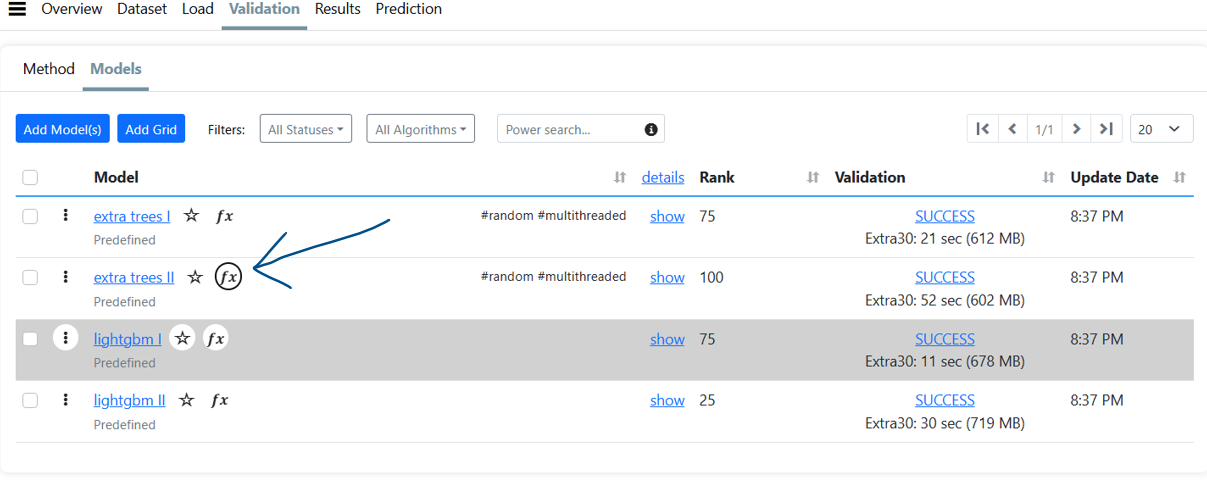

For this we take the Model(s) with the best results:

We click on the ML model (the “Fx” symbol):

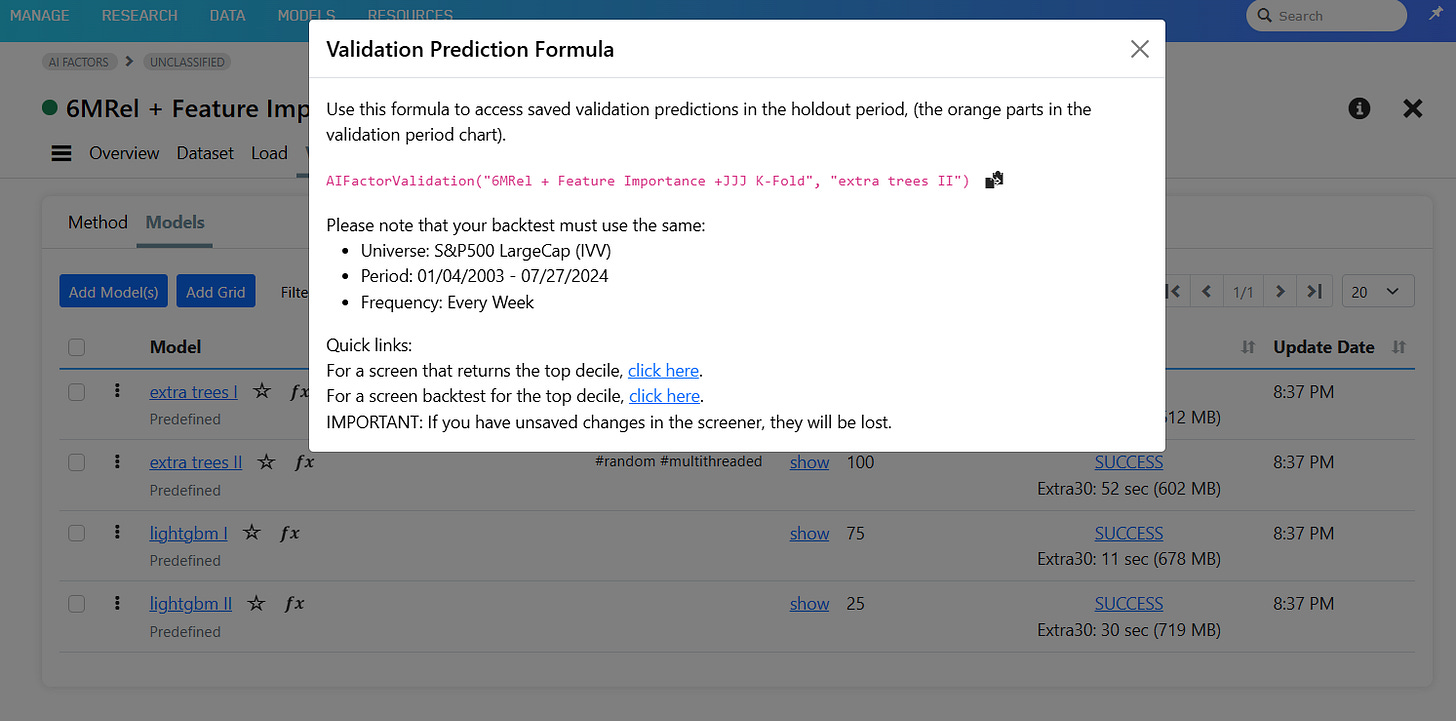

And this window pops up:

Now we copy the string AIFactorValidation("6MRel + Feature Importance +JJJ K-Fold", "extra trees II") into a ranking system, include that ranking system in a portfolio strategy, and test the AIFactorValidation from 01/04/2003 to 07/27/2024.

And here is the backtest result:

Again, the above test is still an out-of-sample test, because “K-fold cross-validation tests the model on unseen data multiple times”.

Yes, but the model behind the first portfolios is trained on data from later periods, that is, on data from the future relative to those portfolios. On the other hand, a K-fold test makes sure the ML model has much more training data.
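To make that trade-off concrete, here is a small sketch of my own (not how Portfolio123 implements it). It assumes contiguous time-ordered folds, ignores any gap between training and holdout periods, and uses a hypothetical helper `future_training_share` that reports, for each holdout fold, what fraction of its training periods lie after the holdout.

```python
def future_training_share(n_periods, k):
    """For each contiguous holdout fold, return the share of its
    training periods that come later in time than the holdout."""
    # Fold boundaries over the time axis 0 .. n_periods-1.
    bounds = [round(i * n_periods / k) for i in range(k + 1)]
    shares = []
    for i in range(k):
        start, end = bounds[i], bounds[i + 1]
        # Training set: every period outside the holdout block.
        train = [t for t in range(n_periods) if t < start or t >= end]
        # Training periods that lie in the holdout's future.
        future = [t for t in train if t >= end]
        shares.append(len(future) / len(train))
    return shares


shares = future_training_share(n_periods=20, k=4)
for fold, share in enumerate(shares, start=1):
    print(f"holdout fold {fold}: {share:.0%} of training data is from the future")
```

Under these assumptions the earliest holdout fold is trained entirely on later data, while the last fold is trained only on the past, which is exactly the tension between "more training data" and "no look-ahead".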

Hmmm, still need to get my head around it…

Best Regards

Andreas