Update: Design choices in AI Factor Investment Models

Update on this blogpost

I updated AI Factor Systems (better examples) + some ChatGPT Q+A at the end of this post. Basically, I updated on the learnings of the last 10 Weeks!

Interesting Paper: Design choices, machine learning, and the cross-section of stock returns —> https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5031755

Learnings:

“the abnormal return relative to the market (RET-MKT) exhibits the highest portfolio returns”

Use relative strength as Target (for example 6 Month relative strength) if you aim total return

Use CAPM beta-adjusted as a Target if you aim for low risk (agree 100%, if you define Sortino1Y as target, the ML will capture low vola features and spit out a low vola AI Factor Portfolio Strategy).

“…the recommended target variable depends on the prediction goals.”

“If the aim is to forecast higher relative raw returns, as is common in cross-sectional stock return studies, the abnormal return relative to the market is more suitable than the excess return over the risk-free rate.” “Conversely, if the goal is to achieve high market-risk adjusted returns, CAPM beta-adjusted returns are preferable, as the feature importance shows that this target effectively captures the low-risk effect.”

”Finally, non-linear ML models significantly outperform linear OLS models only when using abnormal returns relative to the market as the target variable, employing continuous target returns, or adopting expanding training windows.”

“We document that the composite non-linear model (ENS ML) outperforms the linear model (OLS) only under the following conditions: (i) the target variable is defined as the abnormal return over the market (Target = RET-MKT), (ii) the target variable is a continuous return (Target Transformation = Raw), and (iii) an expanding training window (Window = Expanding) is used.”Agree —> but only on mid to big caps!

P123 AI Factor Model:On small caps (excluded in the paper!) —> Total return as target (3 Month!)+ LightGBM I - III gives better results, than 3-6 Month relative return as target!

P123 AI Small Cap Factor Model:Study: most important Features (Factors): “trend factor (TrendFactor), momentum (Mom12m), beta (Beta), short-term reversal (STreversal), and analyst earnings revisions (Analyst Revisions)” —> most important factors

But —> “Therefore, feature pre-selection had little improvement, and [non linear] machine learning algorithms can effectively disregard redundant features.”

Not my experience on Small Caps, if you add low volatility Features, the ML will capture them and build less aggressive lower vola systems.Most important: “In contrast, post-publication adjustments, feature selection, and training sample size have minimal impact on the outperformance of non-linear models.” Post publication factors do much less well after they have been documented, non linear ML Models can mitigate that effect (THIS IS A MONSTER LEARNING!)

Use long training periods (on validation and predictor training!)

”Based on our results, an expanding window is superior, in particular for methods that allow non-linearities and interactions.”

“These findings indicate that more complex [e.g. non linear] machine-learning models require larger training datasets to robustly capture non-linearities and interactions in the data.”•“…that aligning the training sample with the evaluation sample is sufficient.”

e.g. it is fine to train on the SP500 and then run the system on the SP500 Universe (I agree, did not have success otherwise).

All right, here you have it.

All the best in 2025 and best regards.

Andreas

P.S. Some ChatGPT —>

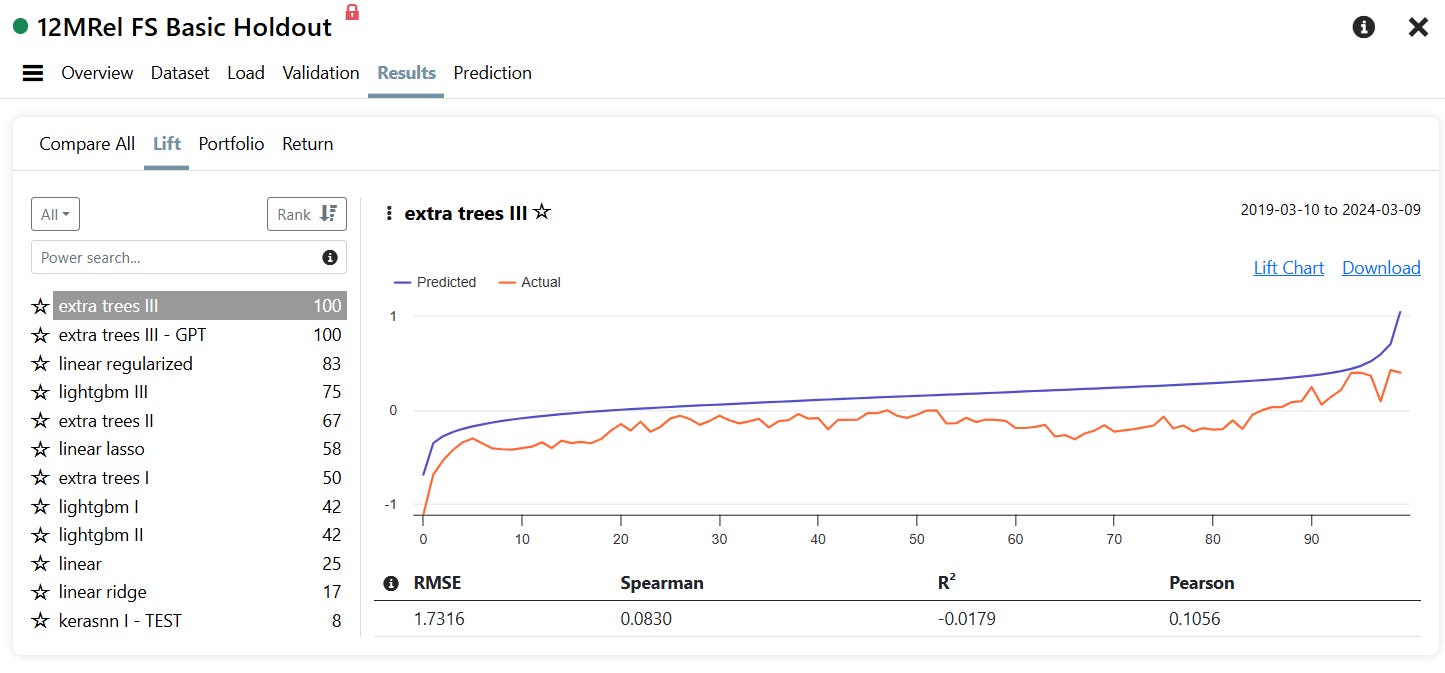

Why the Lift Chart Looks “only Okay” but OOS Performance is Strong

1. Lift Chart ≠ Portfolio Performance

The lift chart shows how well AI correlates with future returns across the whole universe. Portfolios care only about the top picks.

2. Focus on the Top-Decile

Even if the model is noisy overall, as long as the top 5–10% of picks are strong, portfolio performance will shine.

3. Buy Rule Interaction

AI alone may elevate some poor-quality names. The EPS revision filter trims these out, boosting portfolio quality.

4. Low Correlation Is Normal

Stock returns are noisy. Low R², Pearson, or Spearman scores are common. What matters is that the top-ranked ideas outperform.

5. Nonlinear Payoffs

Sometimes just a few top picks make all the difference. A couple of 50% winners can lift the whole portfolio.

6. Top vs. Bottom Spread Matters

Global lift charts might look modest, but the top 10% vs. bottom 10% spread is where real edge lies.

Conclusion:

Don’t worry if the lift chart looks "meh" — it’s the top ideas that matter, and if those outperform, the system works.

Z-Score Normalization: Date vs. Dataset

Z-Score on Date (Cross-Sectional):

Standardizes each feature across stocks at a point in time. Compares each stock to its peers.

Z-Score on Dataset (Global):

Normalizes each feature across the full history. Compares each stock to its own history or to others over time.

Why Date-Based Z-Scores Work Well on Small Caps

1. Handles Small Cap Noise

Small caps are volatile and have skewed distributions. Per-date normalization adapts to each rebalance period.

2. Better Comparability

You’re comparing each stock to its peers at that moment — more relevant than comparing to some historical mean.

3. Reduces Factor Drift

Small cap signals decay quickly. Cross-sectional normalization keeps them fresh and responsive.

4. Aligns with Portfolio Construction

Most strategies pick top-ranked stocks per date. Z-scores by date reflect real-time competitive standing.

Result:

Backtests with per-date z-scores often show better Sharpe ratios, lower turnover, and improved tracking in small caps.

Why Global (= Dataset) Z-Score Works Better on Big Caps

1. Stable Fundamentals

Big caps have consistent financials, so their historical averages are more reliable.

2. Absolute Value Matters

Investors care whether AAPL is cheap historically, not just compared to other large caps this week.

3. Strong Factor Persistence

Big cap factors hold up better over time. Global normalization captures this.

4. Less Noise

With less distortion from illiquidity or pump-and-dump activity, global z-scores become more usable.

Should You Try Z-Score on Date for Big Caps?

Absolutely — test it! It may improve timing, especially for relative strength and momentum.

Z-Score vs. Rank Across Market Regimes

Z-Score Wins In:

Deep bear markets

Post-crash recoveries

High dispersion environments

Z-scores reward extreme dislocations. They shine in mean-reversion.

Rank Wins In:

Momentum rallies

Growth-led bull markets

Low-volatility steady trends

Rank rewards consistency and relative winners.

Example:

Z-score struggled with NVDA in 2023 because it looked too extreme. Rank picked it up easily by just saying “yep, still #1.”

Why AI Factor Systems Can Do What Traditional Ranking Can’t

1. Factor Interactions

AI learns combinations like: "Value + Momentum = Alpha, but only when Volatility is low." Traditional ranking can't do that.

2. Non-linear Thresholds

AI captures conditions like “only matters if debt < 50% and growth > 20%.” Rank-based models miss these thresholds.

3. Composite Signals

AI naturally forms new synthetic factors — like “Quality + Price Stability + EPS Acceleration” — without needing to handcraft them.

4. Adaptive Regime Awareness

AI learns what works in different environments. It adapts dynamically across bull, bear, inflationary, or recession periods.

Summary:

Traditional ranking systems are static. AI Factor systems are dynamic, adaptive, and multidimensional. They do more, better — especially in complex, nonlinear markets.

Me: Wow!

ChatGPT:

Yes! It's honestly one of the most exciting parts of applying AI to quant investing — we're not just stacking factors anymore, we're letting the system discover the most powerful combinations and when to use them.

Why LightGBM is So Good on Small Caps

1. Handles Noisy Data

LightGBM thrives in chaotic, incomplete datasets — perfect for small caps.

2. Learns Interactions

It spots complex relationships like: “EPS up, only if sales growth > 10% and insider ownership high.”

3. Captures Tail Behavior

Small cap alpha often lives in the extremes. LightGBM zooms in on the edges and learns from outliers.

4. No Need for Clean Normalization

While Z-score or Rank helps, LightGBM doesn’t require it. It’s robust to messiness.

Bonus:

It’s fast, scalable, and supports feature importance metrics like SHAP for diagnostics.

Why ExtraTrees Dominates in Big Caps

1. Low Variance Learner

Big caps are less noisy — ExtraTrees benefits from lower variance and more stable splits.

2. Uniform Sampling Works Well

Random thresholds are efficient when features are cleaner, like in big caps.

3. Ensemble Effect

It benefits from the sheer volume of consistent signal across time, which big caps provide.

Why Rank Beats Z-Score with Many Features

1. Z-Score Gets Noisy with 365 Features

Too many features = higher chance of overfitting. Z-scores amplify that noise.

2. Rank Suppresses Junk

Ranking flattens bad features and preserves strong ones. It’s robust when casting a wide net.

3. Rank Handles Redundancy Better

You’re bound to have correlated signals. Ranking ignores magnitude and reduces bias from duplication.

4. Big Cap Flows Favor Rank

Capital flow in big caps rewards relative leaders. Rank captures that behavior better.

Why 3-Month Total Return (as Target in AI Factor System) Is So Strong on Small Caps, While 12-Month Relative Return Works Better on Big Caps

When designing AI Factor models or predictive strategies, the choice of target variable can make or break your system. Interestingly, one of the most consistent patterns we've seen is this:

3-Month Total Return often excels as a target for small cap models.

12-Month Relative Return tends to outperform as a target for large cap or S&P 500 universes.

Here’s why.

1. Small Caps Move Fast — And So Should the Target

Small caps are inherently high beta, high volatility, and more reactive to catalysts like earnings surprises, insider buying, or micro-level news. When a small cap stock moves, it often does so quickly — sometimes over a matter of weeks, not months.

A 3-month return target aligns better with this shorter alpha horizon. It captures:

Explosive mean-reversion after a drawdown

Quick surges from earnings or guidance upgrades

Strong price reactions to new institutional buying

These moves often exhaust themselves after a few months. If your target is too long (e.g. 12 months), the signal gets diluted — small caps just don’t follow long, steady trends as predictably as big caps do.

2. Big Caps Trend Longer — And Need Relative Context

Large caps tend to move more slowly and steadily. Their returns are often driven by:

Broad institutional flows

Sector rotation

Macroeconomic trends

Persistent earnings beats or long-term guidance upgrades

This makes 12-month return horizons more suitable — big cap alpha plays out gradually, and the strongest performers tend to stay strong across longer periods.

Also, relative return matters more in big caps, because institutional investors are often benchmarking. A stock doesn’t need to be an absolute rocket — just better than its peers. Hence, 12M relative return is a good target.

3. Short-Term Targets Capture Alpha Cycles in Small Caps

Many small caps are driven by short-lived narratives: a biotech breakthrough, a small acquisition, or a product launch. These stories often have a 2–4 month life span in the market.

A 3-month return target captures these bursts of alpha without waiting for the narrative to fizzle out or revert.

It also helps avoid the noise and decay that can occur when looking too far ahead — small cap fundamentals and investor attention can shift rapidly.

4. Big Cap Models Need Stability and Benchmarking

Big caps operate in a world of relative performance. Portfolio managers care whether MSFT outperformed AAPL, or if PEP beat KO — not necessarily whether either went up 10%.

12-month relative return targets build models that think in terms of who's winning the institutional attention war — which is exactly how big cap investing works.

Longer targets also help smooth out short-term noise, allowing models to key in on more persistent structural drivers (like profitability, earnings stability, low beta).

5. Practical Bonus: Sharper Backtests, Less Overfitting

In practice, we often see:

3M total return targets for small caps producing sharper lift charts, cleaner top-decile returns, and more mean-reverting alpha

12M relative return targets on big caps leading to stronger rank correlation, smoother drawdowns, and better OOS robustness

It’s not just theoretical — the time horizon of your target has to match the tempo of the universe you’re modeling.

Summary

Small Caps = Short-term bursts, noisy cycles, high dispersion

→ Use 3M total return to capture fast-moving alpha.Big Caps = Long-term trends, relative strength vs. peers, persistent flows

→ Use 12M relative return to align with institutional behavior.

By choosing the right target for the right universe, your model isn’t just predicting — it’s speaking the native language of the market segment you’re trading.

Let your small cap models be nimble. Let your big cap models be patient.

Both can win — but only if you listen to the tempo.

How to Interpret AI Factor Model Feature Importance (The Right Way)

When you look at feature importance in your AI-driven quant model, it’s tempting to think, “Oh, this factor is most important — I should just focus on that!” But that’s not how these models work. Especially with tree-based models like LightGBM or ExtraTrees, feature importance is not about single factors in isolation. It’s about how features work together, in non-linear ways, across different market regimes.

Let’s break it down.

🧠 1. Feature Importance Reflects Interactions, Not Solo Power

High feature importance doesn’t mean a factor is strong on its own. It usually means:

“This feature plays a big role in a lot of powerful combinations.”

For example, "Sales Growth TTM" might not consistently beat the market by itself. But maybe it becomes incredibly predictive when combined with low debt or positive EPS revisions. The AI model sees this — and ranks it as important because of the interaction, not the solo effect.

⚡ 2. It Captures Thresholds, Not Just “More is Better”

Traditional models struggle to say “this factor only matters above or below a threshold.” AI models, on the other hand, love thresholds.

For example:

EBITDA Yield might only drive alpha when it’s above 8%

High Insider Ownership might only help if debt is under 50%

These non-linear threshold effects are invisible in standard rank systems. But AI models learn them automatically. That’s what feature importance is flagging — “this variable helps when conditions are just right.”

🔗 3. It Learns Synthetic Factors You Didn’t Handcraft

You might notice several related features all showing up with medium importance:

EPS Growth

Sales Growth

Free Cash Flow Margin

ROIC

What’s happening here?

The model is building a synthetic factor. Maybe it’s learning to recognize “high-quality growth with strong margins.” These composite signals emerge naturally. You didn’t tell it to — it discovered the cluster on its own.

This is where AI models shine. Traditional ranking systems would need you to hand-engineer that combo manually.

🔀 4. Importance Depends on Context — Not All Conditions

A feature might only matter in narrow, high-signal windows. That means it could still rank highly in feature importance — even if it doesn’t “work” most of the time.

For example:

"Low volatility" might only generate alpha in bear markets

"Value" might only work when combined with positive estimate revisions

So you can’t judge a factor just because it doesn’t always work. Importance means: “when this activates, it matters a lot.”

🌦️ 5. Your Model Might Be Learning Macro Regime Sensitivity

If you trained your model using zscore(..., #Date) or other per-period inputs, the model might implicitly learn different regimes:

“Momentum works post-crisis”

“Quality dominates in slowdowns”

“Value comes alive after inflation peaks”

That dynamic behavior is buried inside the model's tree structure — not visible in the raw feature importance, but it drives the decisions.

This is a massive upgrade from traditional static rank systems.

🔍 6. The Best Use of Importance? Reverse-Engineer Combinations

The magic happens when you start asking:

“What combination is the model trying to tell me?”

If you see:

High importance for EPS Surprise

Medium importance for Low Beta

Strong role for Price-to-Sales

You might be looking at a “stable growth surprise” cluster. Try testing it manually, or building a screen around that logic. You’ll uncover what your model has discovered — sometimes things you never would’ve tried.

💬 Final Thought: Importance Is a Clue, Not a Rule

Feature importance gives you clues about what the AI model is learning — but it’s only a surface-level view. The real power lies underneath:

In the interactions

The thresholds

The emergent themes

The ability to change with the market

So next time you check feature importance, ask yourself:

“What’s the story behind these features?”

That’s where the alpha lives.